Introduction to "The Role of Computational Literacy in Computers and Writing"

Mark Sample, George Mason University; Annette Vee, University of Pittsburgh

Enculturation: http://enculturation.net/computational-literacy (Published: October 10, 2012)

If we can measure the significance of a new scholarly object by the number of innovative courses, breadth of engaging research, and buzz of online activity, then code has reached a critical moment in writing studies. Scholars such as N. Katherine Hayles and Espen Aarseth have long focused on the function of code in electronic texts, but ever since Mark Marino described the humanistic reading of code as “critical code studies” in 2006, the field has exploded. Often working at the level of code, the computer science scholars Michael Mateas and Noah Wardrip-Fruin are exploring games as narratives. Rhetoricians who program commercially, such as Ian Bogost, are similarly concerned with procedurality in new media, particularly game code. And works such as Bradley Dilger and Jeff Rice’s edited collection From A to <A> foreground code as a mode of writing. Of course, this work builds on early research by Paul LeBlanc, Gail Hawisher, Cynthia Selfe, Jim Kalmbach, and Ron Fortune, all of whom endeavored to draw attention to the politics and composition of code in the 1980s and 1990s.

Code has not only made its way into our research, it has also found its way into our classrooms. Kevin Brock’s Code, Computation and Rhetoric class at North Carolina State University, Jamie Skye Bianco’s Composing Digital Media course at the University of Pittsburgh, and James Brown Jr.’s Writing and Coding composition course at the University of Wisconsin-Madison are all examples of writing classrooms that now include code. In programs like Communication, Rhetoric and Digital Media at NC State, there is a growing recognition that some expertise in computational thought is a part of future success as a scholar affiliated with the humanities. As David M. Rieder has argued, code is blurring into our textual compositions, such that it’s no longer possible to bracket it off from writing pedagogy.

It may be that composition and rhetoric teachers are discovering what software developers already know. That is, from the perspective of computer science, programming has long looked like writing. Turing Award-winner Donald Knuth has argued for “literate programming” and Frederick Brooks has drawn a parallel between the programmer and the poet. More recent efforts under the name of “computer science for all,” “computer programming for everybody,” or “computational thinking,” promoted by computer science educators such as Mark Guzdial and Jeannette Wing, have sought to teach computing across the university curriculum, just as writing is now. These educators build on decades-old efforts by Seymour Papert (the designer of Logo) and John Kemeny and Thomas Kurtz (the creators of BASIC), who designed languages that would help a wider spectrum of people learn to code.

Beyond academia, popular initiatives and news stories have made the connection between coding and writing. For example, the Code Year initiative made a big splash in January 2012 with some good PR, as well as a promise by New York Mayor Michael Bloomberg that he would learn code this year; as of this writing, over 450,000 people have signed up to receive weekly code lessons via email. Massively Open Online Courses, or MOOCs, taught by professors at prestigious institutions such as Stanford and MIT have inspired tens of thousands of civilians to enroll in basic computer science courses. Writing for The Guardian in March 2012, John Naughton explains “Why all our kids should be taught to code.” Media theorist Douglas Rushkoff caused a stir in 2011, arguing, “In the emerging, highly programmed landscape ahead, you will either create the software or you will be the software. It's really that simple: Program or be programmed.”

It is against this backdrop that the Town Hall “Program or be Programmed: Do We Need Computational Literacy in Computers and Writing?” was proposed. The panel organizer, David Rieder, saw the growing interest in software studies and critical code studies, the ever-deepening engagement with computational approaches to the digital humanities, and his own local experiences teaching graduate courses on Arduino and Processing add up to an emerging interest in code and writing studies. Rieder asked Annette Vee to help plan and recruit panelists for the Town Hall, and both were delighted that writing and coding scholars Alexandria Lockett, Elizabeth Losh, Mark Sample, and Karl Stolley agreed to speak.

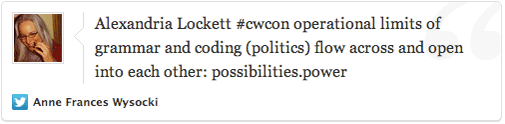

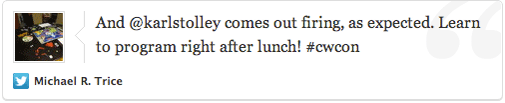

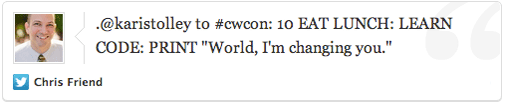

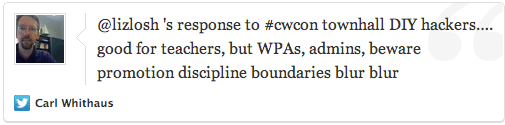

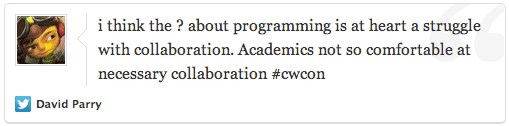

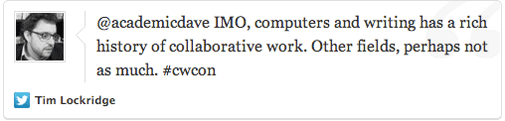

In this edited iteration of the Town Hall (you can watch the original on Vimeo, courtesy of Dan Anderson), we’ve provided a more polished version of each panelist’s script, along with a few sample tweets from the audience to represent the dialogue with the presenters. This Storify captures a more complete Twitter backchannel during the presentation. Lynn C. Lewis's review for the University of Michigan's Sweetland Digital Rhetoric Collaborative offers additional context for the original presentations.

As the title of the Town Hall implies, the pieces below are meant to be provocative rather than thorough considerations of the role of code and computational literacy in computers and writing. Rieder begins by arguing against the logocentric bias of writing studies, and asks: why limit texts to their readability? Based on their power and ubiquity, we should understand processes, specifically algorithmic ones, as the new basis of writing. Vee picks up on Rieder's final assertion that those who don't code will be "stuck in the logocentric sands of the past" and breaks down what it means to have "coding" defined by a coterie of specialists. She asks: if coding is central to writing, shouldn't the values associated with good code be as diverse as the values associated with good writing? Yet we cannot ignore the social context already established for code, asserts Sample. Invoking historically saturated statements from the BASIC programming language, Sample outlines concerns about coding style, substance and interpretation to indicate code's social nature. The social nature of code is clear for Lockett, who relates coding culture to code-switching across versions of English and notes the "sponsorship" of a friend with whom she exchanged knowledge about political and rhetorical theory for knowledge about code. The tension between her assertion that she is "not a computer programmer" and her facility with Linux and various coding structures resolves—at least provisionally—in her embrace of the term "hacker," which gives her the latitude to argue for political awareness about the "socio-technical systems" associated with code and software. Finally, Stolley gives us a call to arms: in Computers and Writing, we must become familiar with the tools that define our field. 
It will not be easy to learn Unix, GitHub, Ruby, and Javascript, but Aristotle argued that "people become builders by building," Stolley reminds us, and ease of acquisition should not dictate what skills we choose to learn.

Responding to these various assertions, Losh reflects on what it might mean to teach and learn code within the context of Computers and Writing. To the anxious instructor struggling to teach students the complexities of writing, much less programming, Losh advises that we think of writing as an "informational art." This perspective can widen the approaches we take as well as the modalities we emphasize in our classes. But to the administrator who must now direct departments to account for programming's role in writing, she offers only tentative models rather than easy answers. Although we ultimately cannot provide an answer to the central question of the panel—"What is the role of computational literacy in computers and writing?"—we hope our provocations contribute to the continuing conversations about coding and composing in writing studies.

David M. Rieder: Programming Is the New Ground of Writing

David M. Rieder, North Carolina State University

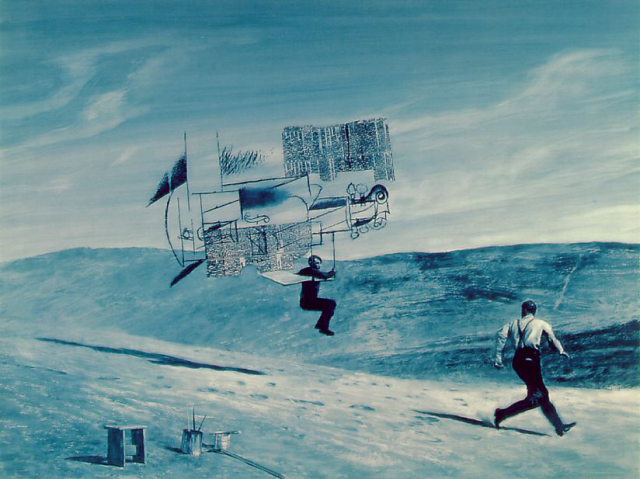

Figure 1. Mark Tansey’s Picasso and Braque

There are three reasons that I’m beginning this presentation with Mark Tansey’s painting, Picasso and Braque (see Figure 1 above).

First, the painting is reminiscent of Orville and Wilbur Wright’s first successful flight in Kill Devil Hills, North Carolina, which is just a few hours’ drive from the site of this conference. Second, and fittingly for a conference on writing, many of Tansey’s paintings, including this one, celebrate text in one way or another, especially the printed word. In the painting above, Picasso is flying one of his collages, while Braque surveys his progress from the ground. The point related to Tansey’s interest in the printed word is that the flying surfaces of Picasso’s collage are made from swaths of newsprint. Arguably, his flying machine is a multimodal writing machine, albeit a curious-looking one because it appears to be more of a combination of kite and plane. It may be more accurate to say it’s a machine made from what look like Hargrave cells wrapped in words. Juxtaposed against the photograph below (see Figure 2), Tansey’s painting is a bit of a mash-up of histories of flying, Hargrave’s kites and the Wrights’ plane.

Figure 2. Wikipedia image of Hargrave (left) and Swain

But, getting back on track, in addition to these two points is the third, which includes my main point. In Tansey’s painting, the words printed on the swaths of newsprint, the writing, has broken free of its logocentric grounding. It’s a relative break (not an absolute deterritorialization) because the writing on those swaths of newsprint is still recognizable as writing—but the words have taken on a value relative to the flying machine that they serve. The words are still readable, but the point here is why bother reading them?

Or, rather (I don’t really mean to throw the baby out with the bathwater), why limit their value to their readability? The flying machine, the dynamic medium they now serve, invites us to step away from our conventional stance toward writing, which is writing at a standstill, and unground ourselves.

In computational media, writing wants to take a walk, not sit on a couch to be analyzed.1 Writing doesn’t need to be limited to the faithful representation of the spoken word—it never did, in fact. In computational media, our alphabet is motivated and dynamic. As Richard Lanham explains in The Economics of Attention, it is capable of thinking. In digital media, writing has expanded far beyond the limits of the logocentric tradition, but many of us continue to reinforce it in our teaching. In a comparison of print and digital textuality, Lanham explains that “all of our attention goes to the meaning of the text” in print-based media (81-2). But when text is digitized, meaning is less important than the ways in which it can move and be transformed. When it comes to computational media and the topic of this town hall, if writing can think (i.e., if it is mobile and transformable) then we should turn our focus away from content, which has diminishing value, to algorithmic forms and functions, which are the stasis of meaning in today’s attention economy.

So, my third point about Tansey’s painting, what I like about it, is that we’re all Picasso in flight, whether we know it or not.

How many of us were multi-tasking with tablets or laptops during the Computers and Writing conference, partly here, partly somewhere else? We’re in flight right now. But here’s the problem, or rather the challenge: while we’re all comfortable in flight, far fewer of us are willing or able to recognize how writing has taken flight, too.

The new ground, the new basis of writing is algorithmic. Today, the power and profit in writing have less to do with representing speech than with serving a computationally-driven, generative process of creation. If you are teaching and practicing writing as a grounded, representational technology, you are missing the proverbial forest for the trees, the machine for the pages of newsprint.

I hope you’ll forgive the next and final provocation, which I fear smacks of what Jill Morris might characterize as the turn from geek to jerk, but, if you can’t write code, if you can’t think with code, if you can’t write algorithmically, you may eventually find yourself stuck in the logocentric sands of the past.2

Notes

1 In the opening pages of Anti-Oedipus, Gilles Deleuze and Felix Guattari write, “A schizophrenic out for a walk is a better model than a neurotic lying on the analyst’s couch” (2).

2 In her presentation at Computers and Writing, Jill Morris reprimanded the geeks in the gaming community whose condescensions about insider know-how made them out to be jerks.

Annette Vee: Coding Values

Annette Vee, University of Pittsburgh

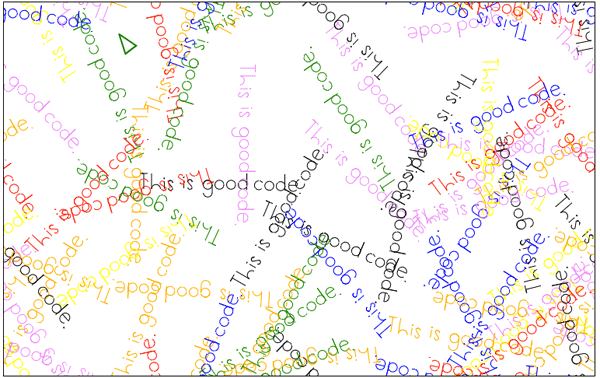

Today I want to talk about good code. Experienced programmers often think about what good code is. But they rarely agree.

And here’s what I want to say: they don’t agree on what good code is because there is no good code. Or, rather, there is no Platonic Ideal of Good Code. Like writing, there is no good code without context.

Unfortunately, when good code is talked about, it is often talked about as if there’s no rhetorical dimension to code. It’s talked about as though the context of software engineering were the only context in which anyone could ever write code. As if digital humanists, biologists, web hackers, and sociologists couldn’t possibly bring their own values to code.

I’ll give you just a couple of examples of how this happens, and what this means for us in computers and writing.

One of the earlier articulations of the supposed Platonic Ideal of Good Code was Edsger Dijkstra’s infamous “GOTO considered harmful” dictum, from 1968.

This article railed against unstructured programming and the GOTO command for its ability to jump from one place in a program to another without logical program flow. Dijkstra’s assertion was so provocative that “considered harmful” soapboxes have proliferated in programming literature and the Web and the “harm” done by goto has been hilariously depicted in an XKCD comic. Many of us first learned the joy of coding through the languages that used the GOTO command such as BASIC. But Dijkstra’s statement suggests that the context of the software production workplace should override all other possible values for code. This is fine—as far as it goes, which is software engineering and computer science. But this kind of statement of values is often taken outside of those contexts and applied in other places where code operates. When that happens, the values of hacking for fun or for other fields are devalued in favor of the best practices of software engineering—that is, proper planning, careful modularity, and unit testing. The popular Ruby figure who went by the name “Why the Lucky Stiff” makes this point about conflicting values between software engineering and hacking forcefully and whimsically in “This Hack Was Not Properly Planned.”

Here’s a more recent invocation of the Platonic Ideal of Good Code. In this comment, we can see that the values of software engineering are more tacit, and more problematic:

Every piece of academic code I have ever seen has been an unmitigated nightmare. The sciences are the worst, but even computer science produces some pretty mind-crushing codebases.

- “Ender7” on Hacker News

“Ender7” is replying here to a thread about a recent Scientific American story that suggested scientists were reluctant to release the code they used to reach their conclusions, in part because they were “embarrassed by the ‘ugly’ code they write for their own research.” According to Ender7, they should be ashamed of their code. Ender7 goes on to say:

So, I don’t blame them for being embarassed to release their code. However, to some degree it’s all false modesty since all of their colleagues are just as bad.

Why is academic code an “unmitigated nightmare” to Ender7? Because it’s not properly following the rules of software engineering. Again, the rules of software engineering presumably work well for them. I’m not qualified to comment on that. But that doesn’t mean that those values work for other contexts as well, such as biology. In this example, software engineering’s values of modularity, security, and maintainability might be completely irrelevant to the scientist writing code for an experiment. If scientists take care to accommodate these irrelevant values, they may never finish the experiment, and therefore never contribute to the knowledgebase of their own field. The question, then, isn’t about having good values in code; it’s about which values matter.

We often hear how important it is to have proper grammar and good writing skills, as if these practices had no rhetorical dimension, as if they existed in a right or wrong space. But we know from writing studies that context matters. Put another way: like grammar, code is also rhetorical. What is good code and what is bad code should be based on the context in which the code operates. Just as rhetorical concepts of grammar and writing help us to think about the different exigencies and contexts of different populations of writers, a rhetorical concept of code can help us think about the different values for code and different kinds of coders.

And this is how coding values are relevant to us in computers and writing. The contingencies and contexts that determine what constitutes good code aren’t always apparent to someone just beginning to learn to code, in part because the voices of people like Ender7 can be so loud and so insistent. We know from studies on teaching grammar and writing that the overcorrective tyranny of the red pen can shut writers down. Empirical studies indicate it’s no different with code. Certainly there are ways of writing code that won’t properly communicate with the computer. But the circle of valid expressions for the computer is much, much larger than Ender7 or Dijkstra insist upon.

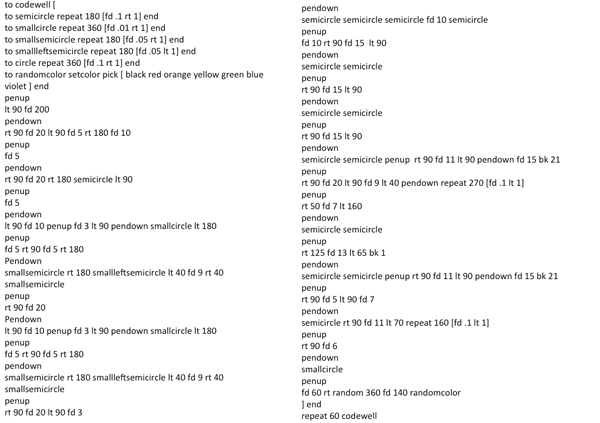

To close, I want to share with you a bit of what might be considered very ugly code, a small Logo program I call, tongue-in-cheek, “codewell”:

This is bad code because:

- it is uncommented and hard to read

- it is in an old, seldom-used language

- it is baggy and has repeated statements that should be rewritten as functions

- it is not modular or reusable

- it is an “unmitigated nightmare”

If you run the code [on github here] in a LOGO interpreter, it looks like this:

So, in addition to saying my code sucks, you could also say this:

- it could be used to teach people some things about functions and code

- it is a start for a LOGO library of letters that might be kind of cool

- it does what I want it to do, namely, make my argument in code form.

Let’s imagine a world where coding is more accessible, where more people are able to use code to contribute to public discourse or solve their own problems, or just say what they want to say. For that to happen, we need to widen the values associated with the practice of coding.

To Edsger Dijkstra, I’d say: coding values that ignore rhetorical contexts and insist on inflexible best practices or platonic ideals of code should be considered harmful – at least to the field of computers and writing.

Mark Sample: 5 BASIC Statements on Computational Literacy

Mark Sample, George Mason University

I want to run through a list of five basic statements about computational literacy. These are literally 5 statements in BASIC, a programming language developed at Dartmouth in the 1960s.

BASIC is an acronym for Beginner’s All-Purpose Symbolic Instruction Code, and the language was designed in order to help all undergraduate students at Dartmouth—not just science and engineering students—use the college’s time-sharing computer system (Kemeny 30).

Each BASIC statement I present here is a fully functioning 1-line program. I want to use each as a kind of thesis—or a provocation of a thesis—about the role of computational literacy in computers and writing, and in the humanities more generally.

10 PRINT 2+3

I’m beginning with this statement because it’s a highly legible program that nonetheless highlights the mathematical, procedural nature of code. But this program is also a piece of history: it’s the first line of the first program in the user manual of the first commercially available version of BASIC, developed for the first commercially available home computer, the Altair 8800 (Altair BASIC Reference Manual 3). The year was 1975 and this BASIC was developed by a young Bill Gates and Paul Allen. And of course, their BASIC would go on to be the foundation of Microsoft. It’s worth noting that although Microsoft BASIC was the official BASIC of the Altair 8800 (and many home computers to follow), an alternative version, called Tiny BASIC, was developed by a group of programmers in San Francisco. The 1976 release of Tiny BASIC included a “copyleft” software license, a kind of predecessor to contemporary open source software licenses (Wang 12). Copyleft emphasized sharing, an idea at the heart of the original Dartmouth BASIC, which, after all, was designed specifically to run on Dartmouth’s revolutionary time-sharing computer system (Kemeny 31-32).

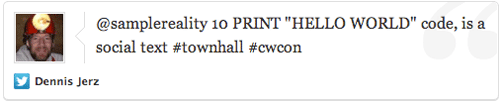

10 PRINT "HELLO WORLD"

If BASIC itself was a program that invited collaboration, then this—customarily one of the first programs a beginner learns to write—highlights the way software looks outward. Hello, world. Computer code is writing in public, a social text.

Or, what Jerry McGann calls a “social private text.” As McGann explains, “Texts are produced and reproduced under specific social and institutional conditions, and hence…every text, including those that may appear to be purely private, is a social text” (McGann 21). Not coincidentally, “Hello World” is also the first post the popular blogging platform WordPress creates with every new installation.

10 PRINT "GO TO STATEMENT CONSIDERED HARMFUL": GOTO 10

My next program is a bit of an insider’s joke. It’s a reference to a famous 1968 diatribe by Edsger Dijkstra called “Go To Statement Considered Harmful.” Dijkstra argues against using the goto command, which leads to what critics call spaghetti code. While Annette Vee addresses that specific debate, I want to call attention to the way this famous injunction implies an evaluative audience, a set of norms, and even an aesthetic priority. Programming is a set of practices, with its own history and tensions. Any serious consideration of code—any serious consideration of computers—in the humanities must reckon with these social elements of code.
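For readers who have not written BASIC, the control flow of that one-liner can be sketched in a structured language. The Python below is only an illustration and not anything from Dijkstra or from the original talk: the name `goto_10` is invented here, and the `while True` loop stands in for the jump back to line 10. Because the BASIC program never stops on its own, the sketch takes just the first three repetitions.

```python
from itertools import islice


def goto_10(message):
    """Yield the message forever, roughly like `10 PRINT ...: GOTO 10`.

    `goto_10` is a hypothetical name used only for this sketch.
    """
    while True:  # structured stand-in for the jump back to line 10
        yield message


# The BASIC original runs until interrupted; here we stop after three lines.
for line in islice(goto_10("GO TO STATEMENT CONSIDERED HARMFUL"), 3):
    print(line)
```

The loop and the jump produce the same output; what differs is how a human reader traces the flow, which is the kind of norm the injunction is about.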

10 REM PRINT "GOODBYE CRUEL WORLD"

The late German media theorist Friedrich Kittler has argued that, as Alexander Galloway put it, “code is the only language that does what it says” (Galloway 6). Yes, code does what it says. But it also says things it does not do. Like this one-line program, which begins with REM, short for remark, meaning this is a comment left by a programmer, which the computer will not execute. Comments in code exemplify what Mark Marino has called the “extra-functional significance” of code, meaning-making that goes beyond the purely utilitarian commands in the code.

Without a doubt, there is much even non-programmers can learn not by studying what code does, but by studying what it says—and what it evokes.
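The gap between what code does and what it says can be shown with a rough Python analogue of the REM line. This is a sketch under stated assumptions: Python's `#` comment stands in for BASIC's REM, and the helper names `what_it_does` and `what_it_says` are invented for illustration. The first runs the source and captures anything printed; the second uses the standard `tokenize` module to collect the comments the interpreter skips.

```python
import contextlib
import io
import tokenize

# A one-line "program" that is nothing but a comment (Python's analogue of REM).
SOURCE = '# print("GOODBYE CRUEL WORLD")\n'


def what_it_does(source):
    """Run the source and return whatever it printed (hypothetical helper)."""
    captured = io.StringIO()
    with contextlib.redirect_stdout(captured):
        exec(source, {})
    return captured.getvalue()


def what_it_says(source):
    """Return the comments in the source, which are never executed."""
    tokens = tokenize.generate_tokens(io.StringIO(source).readline)
    return [tok.string for tok in tokens if tok.type == tokenize.COMMENT]


print(repr(what_it_does(SOURCE)))
print(what_it_says(SOURCE))
```

Running the source prints nothing, yet the farewell is still there in the text for any human reader to find.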

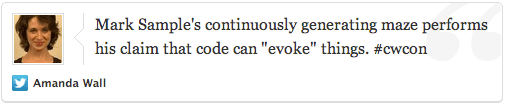

10 PRINT CHR$(205.5+RND(1));:GOTO 10

Finally, here’s a program that highlights exactly how illegible code can be. Very few people could look at this program for the Commodore 64 and figure out what it does. This example suggests there’s a limit to the usefulness of the concept of literacy when talking about code. And yet, when we run the program, it’s revealed to be quite simple, though endlessly changing, as it creates a random maze across the screen.

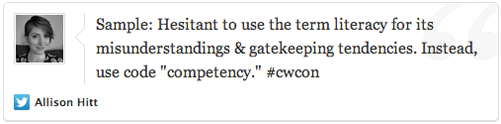
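For readers without a Commodore 64 at hand, the logic of the one-liner can be approximated in Python. This is a loose sketch, not a faithful emulation. It assumes that `RND(1)` returns a value from 0 up to but not including 1 and that `CHR$` truncates its argument, so each character is PETSCII 205 or 206 with equal probability. Mapping those two codes to the Unicode diagonals `╲` and `╱`, the function name `ten_print`, and the bounded grid (the original runs until interrupted) are choices made here for illustration.

```python
import random


def ten_print(rows=8, cols=40, seed=None):
    """Return a bounded slice of the maze as a string (hypothetical helper)."""
    rng = random.Random(seed)
    lines = []
    for _ in range(rows):
        # 205.5 + RND(1) lands in [205.5, 206.5); truncating gives 205 or 206.
        codes = [int(205.5 + rng.random()) for _ in range(cols)]
        # Assumed mapping of the two PETSCII diagonals to Unicode.
        lines.append("".join("╲" if code == 205 else "╱" for code in codes))
    return "\n".join(lines)


if __name__ == "__main__":
    print(ten_print(seed=64))
```

The 40-column default mirrors the width of the Commodore 64 text screen, where the wrapping of the endless character stream is what turns a line of slashes into a maze.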

So I’ll end with a caution about relying on the word literacy. It’s a word I’m deeply troubled by, loaded with historical and social baggage. It’s often misused as a gatekeeping concept, an either/or state; one is either literate or illiterate.

In my own teaching and research I’ve replaced my use of literacy with the idea of competency. I’m influenced here by the way teachers of a foreign language want their students to use language when they study abroad. They don’t use terms like literacy, fluency, or mastery; they talk about competency. Because the thing with competency is, it’s highly contextualized, situated, and fluid. Competency means knowing the things you need to do in order to do the other things you need to do. It’s not the same for everyone, and it varies by place, time, and circumstance. Competency also suggests the possibility of doing things, rather than simply reading or writing things.

Translating this experience to computers and writing, competency means reckoning with computation at the level appropriate for what you want to get out of it—or put into it.

Alexandria Lockett: I am Not a Computer Programmer

Alexandria Lockett, Pennsylvania State University

I am not a computer programmer. My ability to execute a programming language extends as far as hypertext mark-up language (HTML), which I learned in the days of crude computing better known as Web 1.0. However, these past couple of years, I have been using Ubuntu, the user-friendly Linux desktop environment founded by Mark Shuttleworth. Ubuntu is a Bantu word which means “humanity unto others.” This concept not only inspired this piece, but embodies the spirit of generosity embedded in open-source participation.

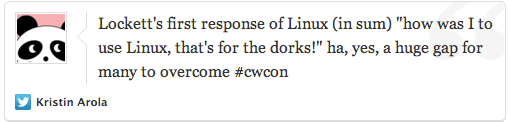

After a couple of weeks, Ubuntu seemed to completely revitalize my cheap old laptop. The system always booted immediately and it didn't freeze up when I ran multiple programs. Through Ubuntu, I gained access to multiple workspaces, as well as the Ubuntu Software Center, where I could download and try hundreds of free applications. Still perplexed that I didn't have to pay for the operating system, I asked my neighbor (who would soon become one of my best friends), "Why doesn’t everyone drop their costly, barely functioning Windows OSes?" Open Source, it seems, thrives on synchronicity. The moment I conspired to murder my machine, a computer programmer appeared at my front door to help me move a heavy piece of furniture. That day my mind was permeated with my technological conflict. I never wanted to use Windows again, and I couldn’t afford the expensive membership fees of the iCult. My consumer power seemed muted by my sense that each company was my only option. Of course, my entire technological socialization process in American public schools involved two options: Mac or PC. Both of these companies’ global dominance (over market shares, GUIs, and hardware designs) made me feel like I was somehow committing some awful sin when I lusted after the possibility of having additional computing choices. The good neighbor suggested that I stop using proprietary software altogether and consider switching to Linux. I heard the word Linux and I automatically felt crushed. I remembered my high school dork friends with their terminals and their occasional visits with the FBI, on account of their cracking credit cards. How was I to use Linux?

Since I could burn ISO files, I was thrilled I could test out Ubuntu on my sluggish machine. Not only was it super easy to use, but I was pissed that I didn’t know this option was available to me. Moreover, I felt dumb not knowing that it wasn’t necessary to buy a new computer: Why couldn’t I differentiate between a hardware and a software problem? Why had I failed to distinguish a computer from a kitchen appliance such as a blender or toaster—objects you toss in the garbage when they no longer work? The Mac/PC binary logic informing the tiny section of my brain devoted to ‘technology and troubleshooting’ blurred the communication functions occurring between these two entities entirely.

I asked my neighbor, who would soon become one of my best friends, why everyone didn’t drop their costly, barely functioning Windows OSes. After hundreds of thousands of hours, over many long days and long nights, I understood concepts like modularity and proprietary lock-in. In fact, I encouraged him to use the latter concept as a framework for an introduction to computer science course. We discussed some of the ways in which we could resist his pedagogical training. He was frustrated with the textbook and lesson plans because these materials took for granted that C++, albeit the industry standard, was the best or only programming language students should learn. Although the roboticist was required to teach over one hundred and fifty students C++, I urged him to compose his own textbook so that everyone could enjoy two major learning opportunities that integrate technical knowledge and civic participation.

First, the format and content of the book would both dismantle the idea that computer science courses should be apolitical. Wikis, by nature, indicate that a given project is too vast for one author due to its need for ongoing, frequent revision. They are never ‘done,’ so to speak. Furthermore, students’ participation on the wiki placed the burden of ‘textbook’ authorship on the entire class, which would decentralize my friend’s role as the sole source of knowledge. He knew that the wiki-format would require students to demonstrate their inquisitiveness. Additionally, he could track and evaluate user participation, which helped him customize lesson plans and additional wiki content with their concerns and abilities in mind. As a result, students practiced three core values of critical pedagogy and hacking: collaboration, active participation, and a curiosity about how things work. Next, he could include supplementary material on social issues in computer programming and computer science, as well as career advice for interested students. Using the hacker name ‘Anova8,’ I collaborated with him throughout the summer on some of this MediaWiki-powered book. Indeed, I received a most interesting peer-to-peer education regarding the fundamentals of computer science while he learned about composition and rhetoric pedagogy. In exchange for his knowledge about types, variables, operators, loops, arrays, and structures, he acquired key lessons in Jane Addams’ radical pragmatism, Jacqueline Jones Royster’s definition of literacy for socio-political action, Critical Pedagogy, and Resistance Theory. We established that programming instruction was inextricably related to and dependent on writing expertise and opportunities for persuasion. Both writing and programming receive widespread attention as subjects in which few can do well, but nearly everyone needs to learn. 
Since these practices are so deeply embedded in many people’s daily lives, such subjects have a strong influence on how we perceive and measure social progress. However, the dynamic, social, experiential instruction that helps us learn these techne is often overlooked.

Through my neighbor’s literacy sponsorship, I came into contact with sites like Slashdot, ReadWriteWeb, and Reddit. I wasn’t interested in “becoming” a member of this community, but I did want to know more about the people who wrote the code: their personal experiences, how they interacted. I wanted to visualize their values, beliefs, and ways of seeing the world. I eagerly read Richard Stallman’s Manifesto, learned more about Linus Torvalds, Eric Raymond, Alan Turing, Steven Wozniak, Larry Page, Sergey Brin, and Ward Cunningham. When my printer and scanner hard drives weren’t compatible with Ubuntu, I perused many discussion threads and figured out how to identify credible advice on how to install them (this was before the HPLIP was accessible in the Ubuntu software center). Successful installation was proof that I could trust the counsel of others. However, I will admit that I did not bother to try out any instructions written by any poster whose post didn't consist of well-sequenced, highly detailed complete sentences. Soon, I began to recognize the characteristics of the Free and Open Source Software Community and its massive impact on other communities. It had a complex history, social languages, intertextuality (i.e. GNU’s not Unix), discourses, conversations. All the ‘stuff’ of a discourse community. Software developments that facilitate(d) Web 2.0, our dependence on ‘free’ applications, and Linux servers running major businesses and the interwebs are striking evidence of its power.

Indeed, I am not a computer programmer, but I began to recognize several commonalities between my perspective as a writing teacher and my friend’s perspective as a programmer. The objectives of having access to code, tinkering with the code, running programs for any purpose, and sharing improvements with the community so everyone benefits were tantamount to my pedagogical approaches to rhetoric and composition. Shortly after I changed my operating system to Ubuntu, I renewed connections with those “dork” friends from high school, who were excited to talk about Linux--and hacking, friendship, consciousness, trance music, and the earth's future. Our long conversations helped me recognize what we had in common all along: a love of wit and play, an insatiable curiosity for learning about how things (and people) work, and a knack for solving problems.

I do not feel as if I need to be a programmer to faithfully represent and perform values held by practitioners of Computers and Writing. I have repeatedly stated that I am not a computer programmer, but I am a hacker. I am able to recognize how bureaucratic linguistic practices inhibit me from “tinkering” with language. I see how the humanities’ obsession with authors and owners inhibits many people from collaborating, dialoguing about, and innovating scholarly research. In fact, I’ve been on the border my whole life, switching back and forth between Standard White English and African American Vernacular English, Midwest Plain Style and Decorous Southern Speech. I’ve used the invisibility and visibility of my identity to push the limits of argumentation beyond the confines of ‘normality.’ I’ve been translating language as long as I can remember, trying to understand these rules, poking fun at them, playing with them, succeeding or failing at remixing and subverting them to open up new paths for language use.

Computational literacy should include a wider range of competencies than ‘technical’ literacy alone. Coding is a dynamic performance, a demonstration of various levels of competency, including how and why I code and whether I can recognize the ways in which code influences the specific programs I choose to run, in both human and machine interactions. In particular, working with multilingual writers in the writing center for the past year has enabled me to recognize the benefits of using computing as a metaphor for helping them understand grammar. For instance, their understanding of the article as an operation for quantity, or a marker with the capacity to establish degrees of specificity, enables them to go beyond a mechanical tendency to always put ‘the’ in front of a noun. Rather, these writers can now decide whether or not they should:

- transform girl to a proper noun and eliminate the need for an article altogether

- indicate how many ‘girls’ exist within that context

- specify what ‘the girl’ is doing, or where she may be located

By understanding what an article does, multilingual writers can practice grammar with their communication aims, instead of a textbook's static examples, at the forefront of their decision-making. Through my pedagogical and personal experiences with language, I’ve learned to understand the operational limits and potentials of grammar as code, and recognize its inseparable relationship to technical, cultural, and political ecologies. This talk, for instance, is a program I’m running to facilitate trust between us so that we can acknowledge the value of anyone who wants to tinker with these discourses for the benefit of helping others quench their thirst for knowledge or find out which beverage motivates them to pursue their drink.

I am not a computer programmer, but should I desire to close the wide gap that exists between what I need to know and what I can possibly learn to become one, I have access to the resources I need to do so via open educational resources. I don’t know C++, Ruby, Python, or Perl, but I was able to get a sense of the politics surrounding their developments and implementations by visiting their websites, reading discussion forums, current events, trade and scholarly journal articles, and talking to individuals teaching and learning these languages. I benefit greatly from experiencing intertwingularity, or the principle that governs the architecture of these collaborative feedback systems whose countless distributed autonomous communities defy unproductive arbitrary hierarchies. I do not teach with technology without discussing the power distributions of socio-technical systems, the ethical responsibilities they impose upon human users, and the ways in which our grammatical code and linguistic arrangements make it difficult for us to talk about emergence. We need hackers of all gradients, from the computer programmer to the radical pragmatist instructor to the DJ to the comic, to resolve the broader issues of helping students develop enough confidence and generosity to hack language, improve their writing, and self-consciously participate in a much broader effort to do humanity unto others.

Karl Stolley: Source Literacy: A Vision of Craft

Karl Stolley, Illinois Institute of Technology

Introduction

For the things we have to learn before we can do them, we learn by doing them. E.g., [people] become builders by building.

- Aristotle, Nicomachean Ethics, Book II, Chapter 1

“Program, or be Programmed.” That’s a dire warning. And being a firm believer in the importance of computational literacy, or source literacy as I prefer to call it, I’ve issued more than my fair share of dire warnings: What happens if we only write in other people’s apps, other people’s text boxes? What happens if we think ourselves so privileged as a field that we can pick and choose from the digital buffet of what will and will not be our concerns?

But for the sake of this short polemic, I won’t issue any dire warnings. I won’t make any direct appeals as to why every member of the Computers and Writing community should immediately learn to program. I don’t even want to argue with anyone about whether we should pursue source literacy. For me, the answer is an obvious and unqualified Yes. But debate or even sharing abstract ideas alone is not persuasive. When it comes to programming, like swimming or any other activity whose lived experience differs greatly from its perception or description, only hands-on, long-term experiences will persuade.

What I can offer here is my vision for the field of Computers and Writing. I want to share what it is that we could do and be if we all make learning to program a routine component of our writing and teaching. I will conclude with four things that each member of the field can do immediately to make this vision a reality.

My Vision

Do not automate the work you are engaged in, only the materials.

- Alan Kay, “Microelectronics and the Personal Computer” (244)

Because this is a vision, I’m going to use the present tense. Not the future tense.

My vision for Computers and Writing places craft at the center of what we do. And what we do is digital production. We make things from raw digital materials: open-source computer languages and open formats. Which is to say, we write digital things. To write digital things, we rely on a strong command of source literacy.

There are no language tricks with the verb write in this source-literate field I envision. Writing is not a metaphor to explain how writing is accomplished by clicking through a WYSIWYG that generates and automates our work for us. The verb write is literal.

Everyone, of course, writes with web standards: HTML, CSS, and JavaScript. Above all else, the field values digital projects that are accessible from any browser, on any device. In my vision, that value has become pervasive; it’s rare anymore to hear of a writing assignment that doesn’t require students to adhere closely to web standards.

And while debates rage over different markup and design patterns, the level of discourse and collaboration regarding digital craft has never been more sophisticated. In fact, in the field as I envision it, several members of the Computers and Writing community are now active participants in the working groups that oversee the development of web standards specifications.

But the really remarkable change has been the number and diversity of open-source software projects initiated and maintained by members of the field I envision. It’s unusual to find someone in Computers and Writing who doesn’t have a GitHub account and a favorite language, or who doesn’t attend the special interest group devoted to that language at the 4Cs each year.

From all of this digital production activity, there has been a renaissance in publishing on digital craft in our field. Numerous digital book titles by researchers in Computers and Writing routinely appear on must-read lists outside the field, especially for programmers and developers whose own professional practice has been greatly augmented by a rhetorical approach to thinking about and doing the work of digital craft.

How did this sense of craft emerge? By rejecting a model of computing that is suited to office cubicles and deskilled writers. By embracing, instead, a deep appreciation for the raw materials, the languages, of the digital medium, and seeing digital writing as more than the on-screen result of the machinations of commercial software.

“Craft,” in the words of Malcolm McCullough, “is commitment to the worth of personal knowledge” (246). It is commitment to research and learning over technical support and intuitive interfaces. Craft is ultimately the sense of taking responsibility for the digital writing that we unleash on the world. It is the thrill and wonder of watching our collective work emerge from the thousands of lines of hand-written source code that make up the eBooks and web applications that members of the field write every single year.

How to Make this Vision a Reality

Here are four means to making this vision a reality. But do not think of them as steps. Rather, think of them as representing a radical shift in digital production that centralizes craft: a shift that you either adopt, or reject.

1. Learn a Unix-like operating system at its command line.

Whether you have a shiny new Mac with the latest version of OS X or run Ubuntu Linux on an old computer you have lying around, the first step to source literacy is to get comfortable with relying on the keyboard to interact with the computer.

2. Commit to writing in a text editor with good syntax highlighting, and start writing HTML and CSS.

For everything. Old habits hold people back more than the challenges of learning something new. Even if ultimately you have to submit something, like a journal article, in Microsoft Word, do your composing in HTML.

3. Learn the distributed version control system Git, and establish a GitHub account.

Experimentation, expansive learning, and even simple revision are all impossible if we’re shackled to the regular file system of a computer, where versioning happens by renaming files. If the computer is, as Steve Jobs said, a bicycle for the mind, then Git is a time machine and guardian angel for the mind’s productive digital work.

4. Learn a couple of web-oriented, open-source programming languages.

JavaScript and Ruby are the two languages I wish everyone knew, on top of HTML and CSS. Despite its reputation, JavaScript has matured into an important language, as evidenced by server-side applications such as Node.js. Ruby, courtesy of development frameworks such as Rails and Sinatra, is quickly becoming the language of choice to power web applications. But Ruby has utility in many other domains, such as programming command-line applications that can extend and deepen command-line literacy beyond basic shell scripting.

I don’t expect any of this will be easy. I engage regularly in all four of the practices above, and have for many years. Yet I still consider myself a learner. And being a learner and valuing learning as itself both craft and challenge, I cannot help but summarily reject ‘ease’ as a measure of any craft that is valuable or worthwhile.

Elizabeth Losh: The Anxiety of Programming: Why Teachers Should Relax and Administrators Should Worry

Elizabeth Losh, University of California, San Diego

The central concept of this panel “Program or Be Programmed” might immediately bring up performance anxiety issues for many composition professionals in the audience. As Stephen Ramsay put it recently, the very notion of the tech-savvy digital humanities as the newest “hot thing” tends to bring up “terrible, soul-crushing anxiety about peoples’ place in the world.” For those in composition, the anxiety might be even more acutely soul-crushing in light of existing labor politics. Every time the subject of learning code comes up, one can almost see the thought balloons appearing: “How can I learn Python in my spare time when I can’t even see over the top of the stack of first-year papers that I have to grade?”

And for those who care about inclusion, what does it mean to choose the paradigm of computer programming culture, where women and people of color so frequently feel marginalized?

Furthermore, if all these powerful feelings are being stirred up, what questions should we be asking about ideology as an object of study? For example, at the 2010 Critical Code Studies conference, Wendy Chun argued that a desire for mastery over blackboxed systems or access to originary source code shows how a particular dialectic of freedom and control makes it difficult for us to have meaningful discussions about technology and to acknowledge our own limited access to totalizing understanding, even if one might be a software engineer.

Fortunately, after reading the arguments above, our audience should feel a little less anxious as they think about teaching writing as an information art. They should know that doing-it-yourself means doing-it-with-others, whether it is imagining Picasso and Braque building a flying machine, as David Rieder suggests, or installing Ubuntu with the help of a neighbor, as Alexandria Lockett describes. The message to instructors is ultimately comforting: relax, be confident in your own abilities to learn new things, ask questions, facilitate the questions of others, and network in ways that help you make new friends.

However, if you are an administrator as well as an instructor, don’t get too relaxed just yet. These talks also bring up some very thorny questions about disciplinary turf. After all, who defines how digital literacy should be taught and who will teach it? Computer scientists? Media artists? Librarians? Writing studies people?

Although he uses the word “craft,” Karl Stolley asserts that “source literacy” doesn’t require an elaborate apprenticeship. All it takes is moving toward a set of everyday common-sense practices involving taking control of command lines and file structures. Mark Sample suggests the term “code competency” as an alternative to “code literacy,” because of all the cultural baggage associated with the word “literacy” itself. Trebor Scholz has suggested “fluency” as a better characterization of what we are trying to teach, but Sample notes the limitations of that term.

In a 2010 essay called “Whose Literacy Is It Anyway?” Jonathan Alexander and I pointed to Michael Mateas’s work on “procedural literacy” as a way for compositionists to begin to engage with these issues. Mateas worries that universities are often too eager to adopt the training regimes of computer science departments, which is great for graduating computer science majors but not so great for teaching students in other majors or with other passions to use code. Mateas also argues that programming languages like Processing are needed in these curricula, because they can be customized by advanced users outside of technical fields while providing scaffolding for beginners to learn languages like Java and C++. (For more on Processing, see my “DIY Coding” interview with the language’s co-creator Casey Reas.)

So what should be the relationship between writing studies and computer science in the academy? The collegiality common between computer science and computers and writing only gets us so far in our responsibility to teach computational literacy.

Both Sample and Vee mention Edsger Dijkstra, who was also the author of “On the Cruelty of Really Teaching Computer Science,” a decidedly anti-humanistic diatribe on the superiority of formal logic and mathematics as the keys to supposedly real knowledge. Dijkstra’s legacy still lives on in many computer science departments, and it is often difficult to have rhetoric taken seriously by stakeholders in many other STEM disciplines. The core curriculum in the Culture, Art, and Technology program that I direct has a digital literacy requirement that generally involves taking a programming course in the computer science department, but not every student feels comfortable crossing those bridges between disciplinary norms.

If there are answers to the questions of who should teach computational literacy, then we have not yet found them. But I’ll put in my own GOTO command for writing studies to keep this spaghetti-like discussion going.

Conclusion

Annette Vee, University of Pittsburgh

Programming has undeniably made its way into Computers and Writing through our research and teaching, but the role of computational literacy in the field is still in flux. Although we have offered arguments for the importance of computational literacy or competency, we have not offered solutions for how and where to teach this critical skill. We offer only suggestions: the digital literacy requirement that Losh mentions is just one administrative attempt to give students outside of computer science access to programming. Many of us in the field have found space in our syllabi for basic web design or graphical programming in Processing, even if we do not all teach Ruby like Stolley. Yet these practices cannot scale up to 50 sections of first-year composition, or across to our colleagues who are expert teachers of writing but not as skilled in digital forms of composition.

What, then, should we teach about programming in our writing classes? As Lockett argues, the politics of software shape our compositions and communications, and we cannot fail to grasp the basic socio-technical mechanisms behind that. If we consider critical thinking part of our composition teaching mission, critical thinking about our software now seems tantamount to critical thinking about other forms of media that we currently teach in our classes.

But teaching programming per se? Given the diversity of approaches to composition across our field—from rhetorical argument to multimodality to critical engagement—it would be folly to propose a one-size-fits-all solution for how we might fit computational literacy into composition. Each university and each instructor must consider the concerns, futures, and backgrounds of their students along with their local resources before coming to conclusions about the role of computational literacy in their courses.

For now, we hope this collection of provocations has helped put computational literacy on the map, not only of the field of Computers and Writing but also of writing studies more generally. The algorithmic processes of programming now form a new ground for writing, one that might make us anxious, but one that should invigorate us as well. We teach and compose in writing, and as we expand the modes by which we define writing, we expand its potential as an informational art.

Works Cited

Altair BASIC Reference Manual. Albuquerque: MITS, 1975. Print.

Aristotle. Nicomachean Ethics. Trans. W.D. Ross. The Internet Classics Archive. Web. 29 Aug. 2012.

Deleuze, Gilles and Felix Guattari. Anti-Oedipus: Capitalism and Schizophrenia. Trans. Robert Hurley, Mark Seem, and Helen R. Lane. Minneapolis: University of Minnesota, 1983. Print.

Dijkstra, Edsger. “Letters to the Editor: Go To Statement Considered Harmful.” Communications of the ACM 11.3 (1968): 147–148. ACM Digital Library. Web. 14 Jan 2011.

Galloway, Alexander R. Gaming: Essays on Algorithmic Culture. Minneapolis: University of Minnesota Press, 2006.

Kay, Alan. “Microelectronics and the Personal Computer.” Scientific American September 1977: 230-244.

Kemeny, John G. Man and the Computer. New York: Scribner, 1972. Print.

Lanham, Richard. The Economics of Attention: Style and Substance in the Age of Information. Chicago: The University of Chicago Press, 2006. Print.

McCullough, Malcolm. Abstracting Craft: The Practiced Digital Hand. The MIT Press, 1998. Print.

McGann, Jerome. The Textual Condition. Princeton, NJ: Princeton University Press, 1991. Print.

Tansey, Mark. Picasso and Braque. Los Angeles County Museum of Art, Los Angeles, CA. Painting.

Wang, Li-Chen. “Palo Alto Tiny BASIC.” Dr. Dobb’s Journal of Computer Calisthenics & Orthodontia, Running Light without Overbite May 1976: 12–25. Print.